In the ever-evolving field of Artificial Intelligence (AI), companies are looking skilled AI developers who can harness the power of AI to transform businesses. Whether you’re an aspiring AI developer or an employer looking to hire, understanding the most commonly asked AI developer interview questions can give you an edge. In this guide, we cover over 50+ AI developer interview questions, ranging from basic to expert level, along with their answers.

This article is your one-stop solution for preparing or assessing candidates in the AI field, with questions specifically specialized to various skill levels. If you’re an employer, this guide will provide insight into identifying top talent. If you’re a candidate, these questions will help you pass your next AI developer interview.

Let’s dive into the questions, starting with the basics and moving to more advanced topics.

Artificial Intelligence (AI) is the field of computer science focused on creating systems capable of performing tasks that typically require human intelligence, such as problem-solving, decision-making, and language understanding. AI can be classified into narrow AI (designed for specific tasks) and general AI (with human-like capabilities across a wide range of tasks).

2. Can you explain the difference between AI, Machine Learning, and Deep Learning?

| Aspect | Natural Language Processing (NLP) | Natural Language Understanding (NLU) |

| Scope | A broader field encompassing all tasks related to language | Subset of NLP focused on understanding the meaning of language |

| Tasks Involved | Includes text translation, tokenization, sentiment analysis | Involves extracting meaning, intent, and context from language |

| Goal | Process and manipulate human language | Comprehend the significance and purpose of human communication. |

| Example | Converting speech to text, text summarization | Identifying the intent of a customer inquiry in a chatbot |

TensorFlow is an open-source platform created by Google for machine learning and AI development. It’s widely used because it makes building, training, and deploying AI models easier, especially for deep learning tasks.

Here are some applications of supervised machine learning in modern businesses:

These applications drive efficiency and improve decision-making across various industries.

7. What are Database Keys and their Types?

Common keys in a relational database include:

Weak AI (Narrow AI)

Weak AI performs specific tasks without genuine understanding:

Strong AI (General AI)

Strong AI, still theoretical, would mimic human intelligence and understanding:

LSTM stands for Long Short-Term Memory, a type of recurrent neural network (RNN) used for time-series data like speech and video.

Activation functions regulate if a neuron should respond. Common activation functions include:

These functions are critical in AI basic interview questions, especially for deep learning models.

A data cube is a multi-dimensional array of data used in data warehousing, allowing for quick analysis of data across different dimensions like time, location, or product.

Convolutional Neural Networks (CNNs) are a type of deep learning model designed for image and spatial data processing. They use convolutional layers to detect patterns like edges and shapes in images. CNNs are widely used in image classification, object detection, facial recognition, medical imaging, and self-driving cars. Their ability to recognize visual patterns makes them highly effective in these applications.

Popular AI platforms include TensorFlow, PyTorch, IBM Watson, and Google Cloud AI. These platforms provide tools for building and deploying AI models.

Common programming languages for AI include Python, R, Java, and C++. Python is the most popular due to its vast library support for machine learning and AI tasks.

18. What is transfer learning?

Transfer learning is a machine learning approach in which a model developed for one task is adapted for a different but related task. This method is especially beneficial when there is a scarcity of data for the new task. Transfer learning is commonly used in image recognition and natural language processing.

19. What is the difference between batch gradient descent and stochastic gradient descent?

Understanding these methods is crucial in interview questions and answers for artificial intelligence, as they affect the speed and performance of training models.

Regularisation is a technique used to prevent overfitting in machine learning models by adding a penalty for larger model parameters. The two main types are:

21. What is Artificial Super Intelligence (ASI)?

Artificial Super Intelligence (ASI) refers to a level of AI that surpasses human intelligence in all aspects, including creativity, problem-solving, and emotional understanding. Unlike current AI systems, which excel at specific tasks (narrow AI), ASI would be capable of outperforming humans in virtually every field, from scientific research to social interactions. It represents the theoretical peak of AI development, where machines possess intelligence far superior to the smartest human brains.

The bias-variance tradeoff is a key concept in machine learning that refers to the balance between two types of errors that affect a model’s performance:

23. Explain gradient descent

Gradient descent is an optimization algorithm used to minimize a function by adjusting its parameters step by step. It calculates the gradient (slope) of the function at a point, and then moves in the opposite direction of the gradient to find the lowest value (minimum). This process repeats until the function reaches its minimum point.

Cross-validation is a technique to assess the performance of machine learning models by repeatedly splitting datasets into training and test sets. The most common form is k-fold cross-validation, where the data is split into k subsets, and the model is trained and tested k times, each time using a different subset as the test set. Cross-validation helps prevent overfitting and ensures that the model performs well on unseen data.

A generative model learns how data is distributed and can generate new data (e.g., Naive Bayes, GANs).

A discriminative model focuses on classifying data by learning the decision boundary between classes (e.g., Logistic Regression, SVM).

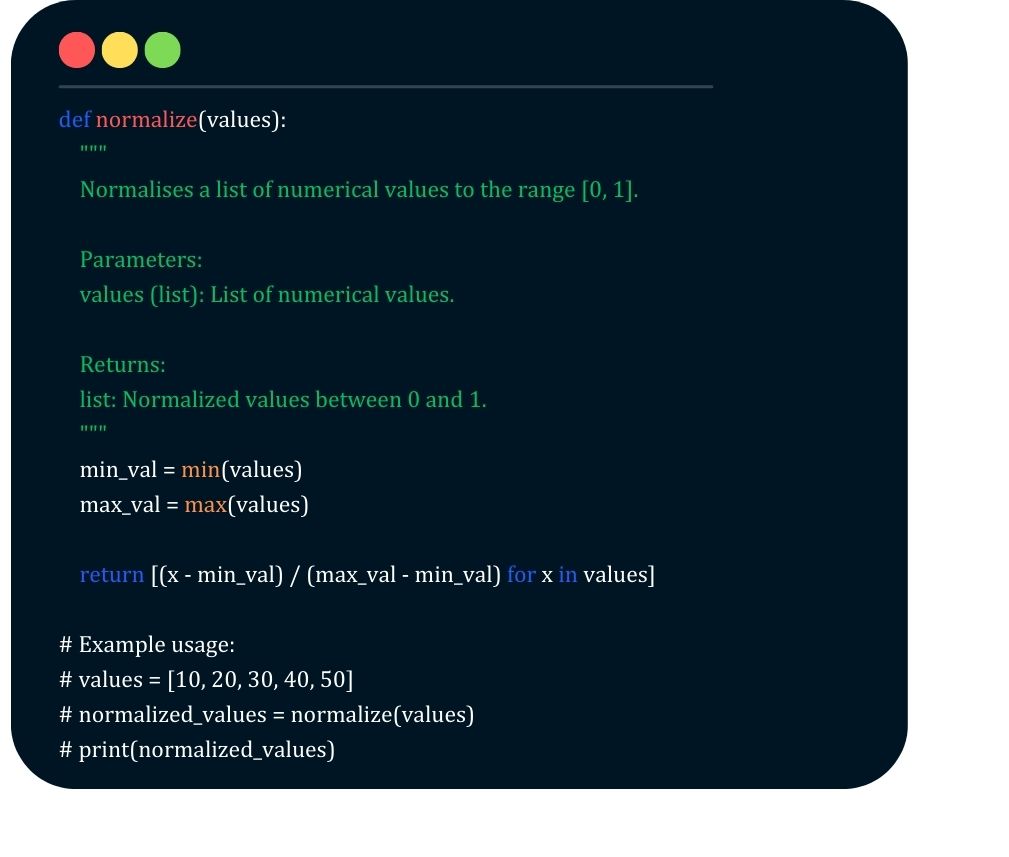

26. What is the purpose of data normalization?

The purpose of data normalization is to scale numerical data into a standard range, typically between 0 and 1. This helps improve the performance and stability of machine learning algorithms by ensuring that features contribute equally and preventing bias toward larger values.

27. Name some activation functions.

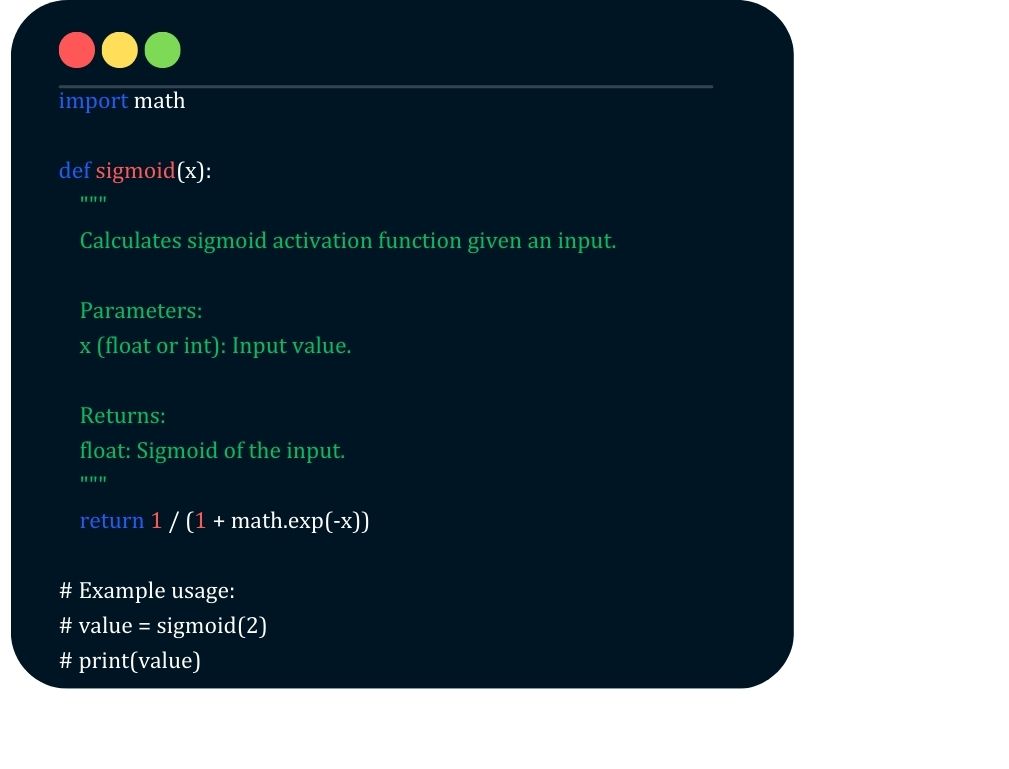

Sigmoid: Maps input values into a range between 0 and 1, making it useful for binary classification. However, it can cause vanishing gradients in deep networks.

ReLU (Rectified Linear Unit): Outputs zero for any negative input and the input itself for positive values, helping networks learn faster. But, it can “die” when neurons output zero for all inputs (dying ReLU problem).

Softmax: Converts raw scores into probabilities, with values summing to 1. It’s commonly used in the output layer of neural networks for multi-class classification problems.

28. What is the difference between L1 and L2 regularization?

L1 and L2 regularization are techniques used to prevent overfitting by adding a penalty to the loss function:

CNNs are designed to process structured grid data like images. The key components are:

30. Briefly explain data augmentation

Data augmentation is a technique used to increase the diversity and amount of training data by applying transformations like rotation, flipping, scaling, or cropping to existing data. This helps improve model performance, prevent overfitting, and make the model more robust by simulating variations that the model may encounter in real-world scenarios.

31. Explain forward propagation and backpropagation

Forward propagation is the process in a neural network where input data is passed through the layers, with each neuron applying weights, biases, and activation functions to produce an output. This output is used to make predictions or classifications based on the input data.

Backpropagation is the process of adjusting the weights and biases in the network based on the error between the predicted output and the actual target. The error is propagated backward through the network, and the weights are updated using gradient descent to minimize the loss, improving the model’s accuracy.

Activation functions introduce nonlinearity into neural networks, enabling it to learn complex patterns. Common activation functions include:

33. Explain cost function

Cost functions assess the difference between a model’s predicted output and its actual target value. It helps guide the model’s training by minimizing this difference to improve accuracy.

34. What are BFS and DFS algorithms?

BFS (Breadth-First Search) explores nodes level by level, visiting all neighbors before moving deeper.

DFS (Depth-First Search) explores as far down a branch as possible before backtracking to explore other branches.

To handle imbalanced datasets, where one class significantly outnumbers others, you can:

36. What is the function of Hyperparameters?

Hyperparameters are settings or configurations that control the behavior of machine learning models and algorithms. Unlike model parameters, which are learned during training, hyperparameters are set before training and directly impact the model’s performance. Their main functions include:

37. What are some drawbacks of machine learning?

Machine learning models can require large datasets, be prone to overfitting, and lack interpretability. They also depend heavily on data quality and may struggle with unseen or biased data.

38. Explain the importance of cost/loss function

The cost/loss function is essential because it quantifies the error between the predicted and actual values, guiding the optimization process to adjust the model and improve accuracy during training.

A confusion matrix is a table used to assess the performance of classification models. It shows the number of true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN), which helps compute metrics like accuracy, precision, recall, and F1-score.

42. Explain Sentimental analysis in NLP?

Sentiment analysis uses natural language processing (NLP) to determine the sentiment or emotion (positive, negative, or neutral) behind a piece of text, like reviews or social media posts.

The Softmax function is often used in the final layer of a neural network for multi-class classification problems. It converts the output of the neural network into a probability distribution over the classes. Each output value is transformed to lie between 0 and 1, and the sum of all output values will be 1, allowing the model to interpret the output as a probability distribution.

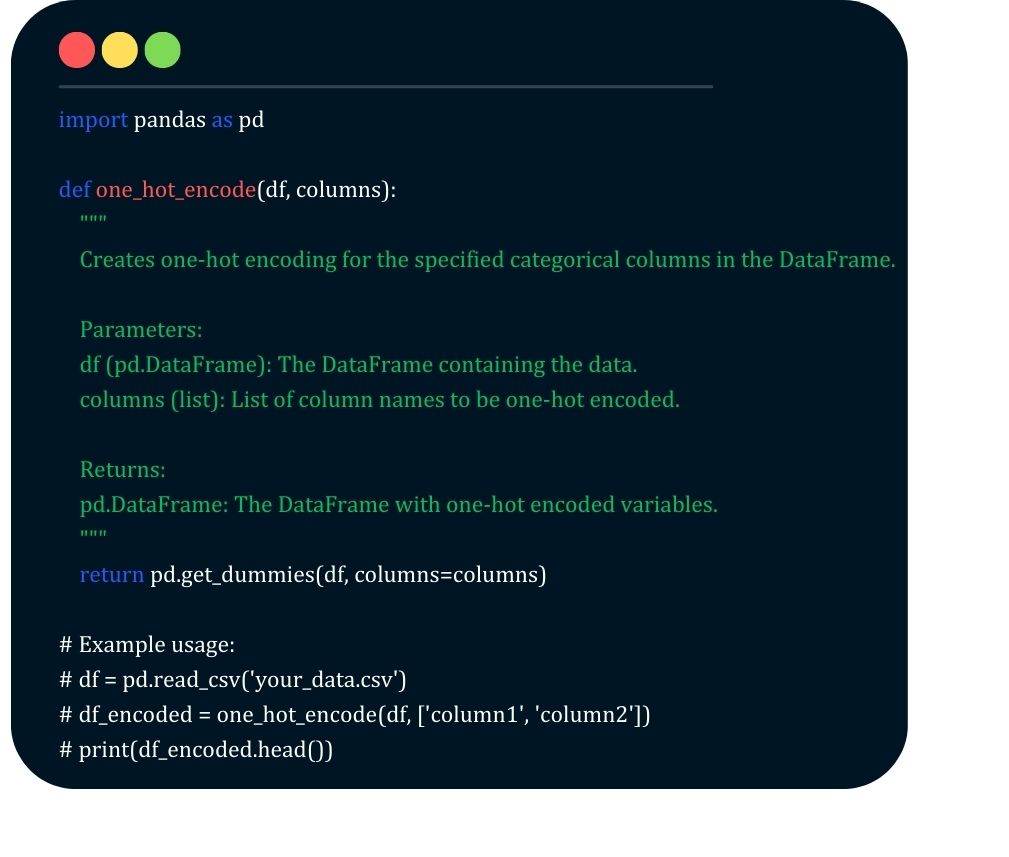

44. Write a function to create one-hot encoding for categorical variables in a Pandas DataFrame

Here’s a function to create one-hot encoding for categorical variables in a Pandas DataFrame:

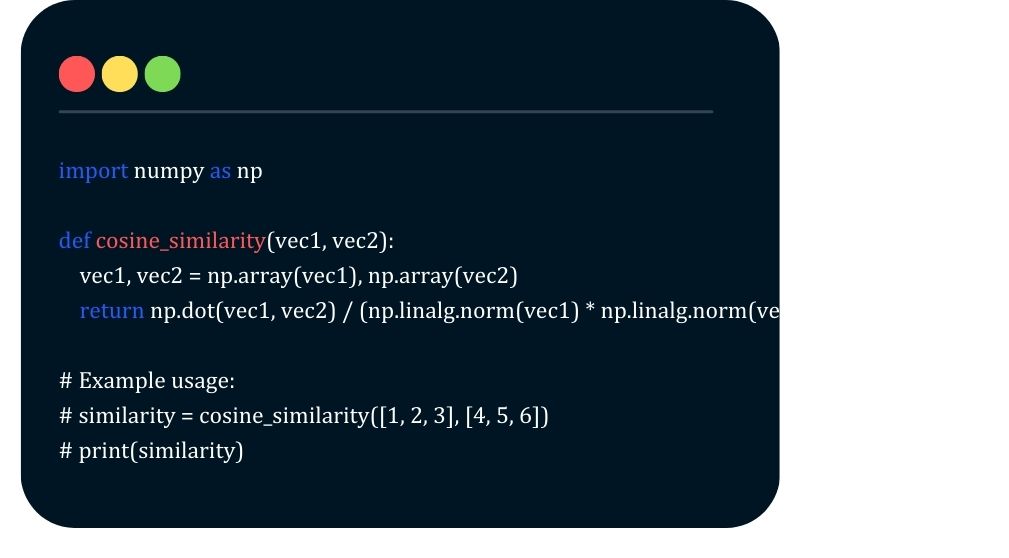

45. Implement a function to calculate cosine similarity between two vectors.

Here’s a shortened version of the cosine similarity function:

This simplified version removes comments and minimizes the function to its essential operations, while still handling the calculation of cosine similarity effectively.

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) designed to mitigate the vanishing gradient problem, which occurs when training traditional RNNs. LSTMs introduce memory cells and gating mechanisms (input, forget, and output gates) that allow the model to retain information over longer sequences without gradients diminishing to near zero. This enables the network to identify long-term dependencies.

48. Implement a function to normalize a given list of numerical values between 0 and 1.

Here’s a simple function to normalize a list of numerical values between 0 and 1

49. What is a Transformer model, and how does it work?

The Transformer is a neural network architecture designed for handling sequential data. Unlike RNNs, Transformers process input sequences in parallel, using self-attention mechanisms to capture dependencies between words or tokens in a sequence. The architecture consists of an encoder and a decoder, and it has been instrumental in the development of state-of-the-art NLP models like BERT and GPT.

50. How do you solve the vanishing gradient problem in RNN?

The vanishing gradient problem happens when neural networks struggle to learn long-term dependencies because the gradients get too small.

A solution is using LSTM networks. LSTM uses three gates for its input, forget and output functions. The forget gate helps decide which information to keep or discard, allowing the network to remember important data over time. This way, LSTMs can effectively handle both short-term and long-term memory, solving the vanishing gradient problem.

51. Implement a Python function to calculate the sigmoid activation function value for any given input.

Here’s a Python function to calculate the sigmoid activation function value for any given input:

Markov Chain Monte Carlo (MCMC) methods are a class of algorithms designed to generate random samples from probability distributions. MCMC constructs a Markov chain that has the desired distribution as its equilibrium distribution and uses the samples to approximate the distribution. MCMC is often used in Bayesian inference to estimate posterior distributions.

The Expectation-Maximization (EM) algorithm is used to find the maximum likelihood estimates of parameters in models with latent variables. It alternates between two steps:

The components of a Reinforcement Learning (RL) agent include:

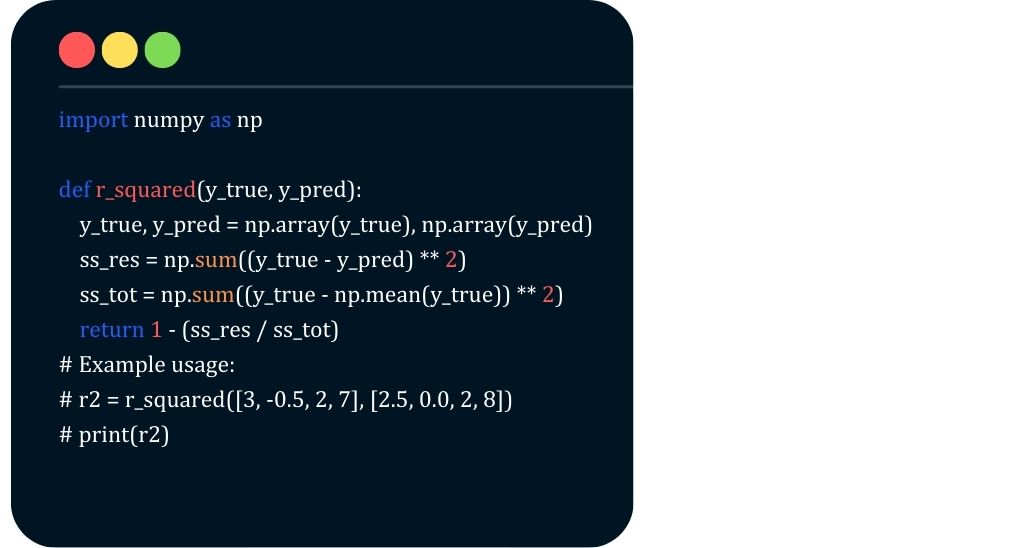

56. Write a Python function to calculate R-squared (coefficient of determination) given true and predicted values.

Here’s a Python function to calculate the R-squared (coefficient of determination) given the true and predicted values:

In the dynamic field of Artificial Intelligence, the demand for highly skilled developers continues to grow. This comprehensive guide of 50+ AI developer interview questions and answers, ranging from basic to expert levels, provides a valuable resource for both candidates preparing for AI roles and employers seeking to assess top talent. Whether you’re a beginner in AI or aiming to deepen your knowledge, these questions will help you grasp key concepts, tools, and techniques essential to the role of an AI developer.